How Digital Cameras Work

A digital camera's sensor is made up of a grid of photosites that are sensitive to light. Each photosite is usually called a pixel, a contraction of "picture element". There are millions of these individual pixels in the sensor of a DSLR camera.

Digital cameras sample light from our world, or from outer space, in three ways: spatially, tonally and by time. Spatial sampling means the angle of view that the camera sees is broken down into a rectangular grid of pixels. Tonal sampling means the continuously varying tones of brightness in nature are broken down into individual discrete steps of tone. If there are enough samples, both spatially and tonally, we perceive the result as a faithful representation of the original scene. Time sampling means we make an exposure of a given duration.
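Spatial and tonal sampling can be illustrated with a small sketch. The scene here is a hypothetical one-dimensional brightness function (`scene_brightness`, values 0.0 to 1.0), and `sample` is an illustrative helper, not anything a real camera runs. It picks one value per pixel (spatial sampling) and then snaps each value to one of `2**bits` discrete steps (tonal sampling).

```python
import math

def scene_brightness(x: float) -> float:
    """Hypothetical continuous scene: brightness from 0.0 to 1.0 along one axis."""
    return 0.5 + 0.5 * math.sin(2 * math.pi * x)

def sample(n_pixels: int, bits: int) -> list[int]:
    """Spatially sample at n_pixels points, then quantize each to 2**bits levels."""
    levels = 2 ** bits
    samples = []
    for i in range(n_pixels):
        x = (i + 0.5) / n_pixels                     # centre of pixel i (spatial sampling)
        value = scene_brightness(x)                  # continuous tone at that point
        step = min(int(value * levels), levels - 1)  # tonal sampling: round down to a step
        samples.append(step)
    return samples

coarse = sample(n_pixels=8, bits=2)   # 8 pixels, only 4 tone steps
fine = sample(n_pixels=8, bits=12)    # same 8 pixels, 4096 tone steps
print(coarse)
print(fine)
```

With only 2 bits, the smooth brightness curve collapses into four flat levels; with 12 bits the same pixels keep far more of the original gradation.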

Our eyes also sample the world in a way that can be thought of as a "time exposure", usually a relatively short one of a few tenths of a second when light levels are high, as in the daytime. Under low light conditions, the eye's exposure, or integration time, can increase to several seconds. This is why we can see more detail through a telescope if we stare at a faint object for a period of time.

The eye is a relatively sensitive detector. It can detect a single photon, but this information is not sent along to the brain because it does not exceed the minimum signal-to-noise ratio threshold of the noise filtering circuitry in the visual system. It requires several photons for a detection to be sent to the brain. A digital camera is almost as sensitive as the eye, and both are much more sensitive than film, which requires many photons for a detection.

It is the time sampling with long exposures that really makes the magic of digital astrophotography possible. A digital sensor's true power comes from its ability to integrate, or collect, photons over much longer time periods than the eye. This is why we can record details in long exposures that are invisible to the eye, even through a large telescope.

Each photosite on a CCD or CMOS chip contains a light-sensitive photodiode made of crystalline silicon, which absorbs photons and releases electrons through the photoelectric effect. The electrons are stored in a well as an electrical charge that accumulates over the length of the exposure. The charge that is generated is proportional to the number of photons that hit the sensor.

This electric charge is then transferred and converted to an analog voltage, which is amplified and sent to an analog-to-digital (A/D) converter, where it is digitized (turned into a number).

CCD and CMOS sensors perform similarly in absorbing photons, generating electrons and storing them, but differ in how the charge is transferred and where it is converted to a voltage. Both end up with a digital output.

The entire digital image file is then a collection of numbers that represent the location and brightness values for each square in the array. These numbers are stored in a file that our computers can work with.

Not all of the photosite is light sensitive; only the photodiode is. The percentage of the photosite area that is light sensitive is called the fill factor. For some sensors, such as CMOS chips, the fill factor may be only 30 to 40 percent of the photosite area. The rest of the area on a CMOS sensor consists of electronic circuitry, such as amplifiers and noise-reduction circuits.

Because the light-sensitive area is so small compared to the size of the photosite, the overall sensitivity of the chip is reduced. To increase the effective fill factor, manufacturers use micro-lenses to direct photons that would otherwise hit non-sensitive areas, and go undetected, onto the photodiode.

Electrons are generated as long as photons strike the sensor during the exposure, or integration, and they are stored in a potential well until the exposure ends. The capacity of the well is called the full-well capacity, and it determines how many electrons can be collected before the well fills up and registers as full. In some sensors, once a well fills up, the electrons can spill over into adjacent wells, causing blooming, which is visible as vertical spikes on bright stars. Some cameras have anti-blooming features that reduce or prevent this. Most DSLR cameras control blooming very well, and it is not a problem for astrophotography.

The number of electrons that a well can accumulate also determines the sensor's dynamic range: the range of brightness, from black to white, over which the camera can capture detail in both the faint and bright areas of the scene. Once noise is factored in, a sensor with a larger full-well capacity usually has a larger dynamic range. A sensor with lower noise also has a larger dynamic range and records more detail in weakly illuminated areas.
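One common way to put a number on this, assumed here rather than taken from this article, is to divide the full-well capacity by the read noise (both in electrons) and express the ratio in stops, where each stop is a doubling. The sensor values below are hypothetical and chosen only for illustration.

```python
import math

def dynamic_range(full_well_e: float, read_noise_e: float) -> tuple[float, float]:
    """Return (ratio, stops), assuming dynamic range = full-well capacity / read noise."""
    ratio = full_well_e / read_noise_e
    stops = math.log2(ratio)  # each stop is a doubling of brightness
    return ratio, stops

# Hypothetical sensor: 40,000 e- full well, 10 e- read noise.
ratio, stops = dynamic_range(full_well_e=40_000, read_noise_e=10)
print(f"{ratio:.0f}:1, about {stops:.1f} stops")
```

Doubling the full well, or halving the noise, adds one stop, which matches the two trends described above.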

Not every photon that hits a detector will register. The fraction that is detected is determined by the quantum efficiency of the sensor, which is expressed as a percentage. If a sensor has a quantum efficiency of 40 percent, four out of every ten photons that hit it will be detected and converted to electrons. According to Roger N. Clark, the quantum efficiencies of the CCD and CMOS sensors in modern DSLR cameras are about 20 to 50 percent, depending on the wavelength. Top-of-the-line dedicated astronomical CCD cameras can have quantum efficiencies of 80 percent or more, although these figures are for grayscale imaging.
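The 40 percent example can be simulated by treating each photon as an independent chance of detection. That modelling choice is an assumption made for illustration; the helper `detect` is hypothetical. The count lands close to, but not exactly on, 4,000, because detection is random.

```python
import random

def detect(photons: int, qe: float, rng: random.Random) -> int:
    """Count how many photons become electrons, each detected with probability qe."""
    return sum(1 for _ in range(photons) if rng.random() < qe)

rng = random.Random(42)  # fixed seed so the run is repeatable
photons = 10_000
electrons = detect(photons, qe=0.40, rng=rng)
print(electrons, "electrons from", photons, "photons")  # expected value is 0.40 * 10,000
```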

The number of electrons that build up in a well is proportional to the number of photons that are detected. The charge in the well is then converted to a voltage. This voltage is analog (continuously varying), is usually very small, and must be amplified before it can be digitized. The read-out amplifier performs this function, matching the output voltage range of the sensor to the input voltage range of the A/D converter. The A/D converter then converts the voltage into a binary number.

When the A/D converter digitizes the dynamic range, it breaks it into individual steps. The total number of steps is specified by the bit depth of the converter. Most DSLR cameras work with 12 bits (4096 steps) of tonal depth.
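The relationship between bit depth and the number of tonal steps is a power of two. This small sketch, with a hypothetical helper `tonal_steps`, lists a few common bit depths:

```python
def tonal_steps(bits: int) -> int:
    """Number of discrete levels an A/D converter with `bits` of depth can produce."""
    return 2 ** bits

for bits in (8, 12, 14, 16):
    # Values run from 0 up to one less than the number of steps.
    print(bits, "bits ->", tonal_steps(bits), "steps, values 0 to", tonal_steps(bits) - 1)
```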

The digitized output is expressed in analog-to-digital units (ADU), also called digital numbers (DN). The number of electrons per ADU is defined by the gain of the system. A gain of 4 means that the A/D converter digitizes the signal so that each ADU corresponds to 4 electrons.
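Using the gain definition above (electrons per ADU), the conversion is a division followed by clipping at the converter's maximum. This is a simplified sketch: the helper name is hypothetical, it truncates to a whole step, and it ignores the bias offset and rounding behaviour of real converters.

```python
def electrons_to_adu(electrons: int, gain_e_per_adu: float, bits: int = 12) -> int:
    """Digitize a charge, assuming gain is given in electrons per ADU.

    The result is clipped at the converter's maximum value (4095 for 12 bits).
    """
    max_adu = 2 ** bits - 1
    adu = int(electrons / gain_e_per_adu)  # truncate to a whole step
    return min(adu, max_adu)

print(electrons_to_adu(4000, gain_e_per_adu=4))   # 4000 e- at 4 e-/ADU
print(electrons_to_adu(20000, gain_e_per_adu=4))  # more than 4095 ADU, so it clips
```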

The ISO rating of an exposure is analogous to the speed rating of film: it is a general rating of sensitivity to light. A digital camera sensor really has only one sensitivity, but the camera allows different ISO settings by changing the amplifier gain. When the amplifier gain doubles, the number of electrons per ADU is halved.

As ISO is increased, fewer electrons are converted into a single ADU. Increasing ISO maps a smaller range of charge onto the same bit depth, which decreases the dynamic range. At ISO 1600, only about 1/16th of the full-well capacity of the sensor can be used: if the full well just fills the converter's range at ISO 100, then ISO 1600 is four doublings of gain higher, and $2^4 = 16$. This can be useful for astronomical images of dim subjects, which are not going to fill the well anyway. The camera collects only a small number of electrons from these scarce photons, and mapping this limited range onto the full bit depth gives finer differentiation between steps. It also gives more steps to work with when the faint data is stretched later in processing to increase its contrast and visibility.
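The ISO arithmetic can be sketched as follows. The base ISO of 100 and the base gain of 10 electrons per ADU are hypothetical values, and the model assumes that the full well exactly fills the A/D range at the base ISO.

```python
def electrons_per_adu(iso: int, base_iso: int = 100, base_gain: float = 10.0) -> float:
    """Electrons per ADU at a given ISO; doubling ISO halves this value.

    base_gain is a hypothetical electrons-per-ADU figure at base_iso.
    """
    return base_gain * base_iso / iso

def usable_full_well_fraction(iso: int, base_iso: int = 100) -> float:
    """Fraction of the full well that fits in the A/D range, assuming the
    full well exactly fills the converter's range at base_iso."""
    return min(1.0, base_iso / iso)

for iso in (100, 200, 400, 800, 1600):
    print(iso, electrons_per_adu(iso), usable_full_well_fraction(iso))
```

At ISO 1600 each ADU represents 1/16th as many electrons as at ISO 100, so the same 12-bit range covers only 1/16th of the well.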

For every pixel in the sensor, the brightness data, represented by a number from 0 to 4095 for a 12-bit A/D converter, along with the coordinates of the location of the pixel, are stored in a file. This data may be temporarily stored in the camera's built-in memory buffer before it is written permanently to the camera's removable memory card.

This file of numbers is reconstructed into an image when it is displayed on a computer monitor, or printed.

It is the numbers produced by the digitization process that we can work with in our computers. The numbers are represented as bits, a contraction of "binary digits". Bits use base-2 (binary) notation, where the only digits are one and zero, instead of the base-10 digits 0 through 9 that we usually work with. Computers use binary numbers because the transistors they are made of are operated as switches with two states, on and off, which represent one and zero. Any whole number can be represented in this manner, and because these transistors switch very quickly, computers can process numbers very fast.
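To see what binary looks like for the brightness values discussed above, this sketch prints some numbers as 12-bit binary strings, using Python's built-in `format`, and converts one back with `int(..., 2)`. The helper `to_binary` is hypothetical. Note that 4095, the largest 12-bit value, is twelve ones.

```python
def to_binary(value: int, width: int = 12) -> str:
    """Base-2 digits of a non-negative integer, zero-padded to `width` bits."""
    return format(value, f"0{width}b")

for value in (0, 1, 2, 5, 255, 4095):
    print(f"{value:>4} -> {to_binary(value)}")

# Converting back: int() with base 2 reads the bit string as a number again.
print(int(to_binary(4095), 2))
```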

Spatial Sampling

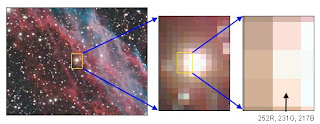

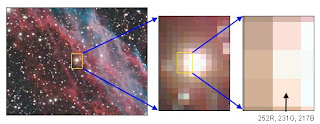

Photosites in the camera's sensor correspond one to one with the pixels in the output digital image, and many people simply call the photosites "pixels". These photosites are arranged in a rectangular array. In the Canon 20D, the array is 3504 x 2336 pixels, a total of 8.2 million pixels. This grid can be imagined as a chess board where each square is very small. The squares are so small that, when viewed from a distance, they fool the eye and brain into thinking the image is a continuous-tone image. If you enlarge any digital image to a big enough size, you will be able to see the individual pixels. When this happens, we call the image "pixelated".

A digitized image is made up of a grid of pixels which are represented by numbers. The numbers specify the pixel's location in the grid, and the brightness of the red, green and blue color channels.

Color images are actually made up of three individual channels of black and white information, one each for red, green and blue. Because of the way the eye and brain sense color, all of the colors of the rainbow can be re-created with these three primary colors.

Although the digital camera can record 12 bits, or 4096 steps, of brightness information, most output devices can display only 8 bits, or 256 steps, per color channel. The original 12-bit ($2^{12} = 4096$) input data must therefore be converted to 8 bits ($2^8 = 256$) for output.
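The simplest way to show the bit-depth reduction alone is to drop the four lowest bits, which divides by 16 (4096 / 256). This sketch assumes a plain linear mapping. In practice the conversion usually also applies the non-linear tone curve described in the Linear vs Non-Linear Data section, so treat this only as an illustration of how 16 input levels collapse into one output level.

```python
def to_8bit(value_12: int) -> int:
    """Map a 12-bit value (0-4095) onto 8 bits (0-255) by dropping the 4 lowest bits."""
    if not 0 <= value_12 <= 4095:
        raise ValueError("expected a 12-bit value")
    return value_12 >> 4  # same as integer division by 16

for v in (0, 15, 16, 2048, 4095):
    print(v, "->", to_8bit(v))
```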

In the above example, the indicated pixel has a brightness level of 252 in the red channel, 231 in the green channel, and 217 in the blue channel. Each color's brightness can range from 0 to 255, for 256 total steps in each color channel when it is displayed on a computer monitor, or output to a desktop printer. Zero indicates pure black, and 255 indicates pure white.

256 levels each of red, green and blue may not seem like a lot, but together they give a huge number of combinations: 256 x 256 x 256 = more than 16 million individual colors.
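The exact count is easy to check: every combination of one red, one green and one blue level is a distinct color, which gives 16,777,216.

```python
levels_per_channel = 2 ** 8               # 256 levels each for red, green and blue
total_colors = levels_per_channel ** 3    # every combination of R, G and B levels
print(f"{total_colors:,}")
```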

Computers and Numbers

Because computers are very powerful at manipulating numbers, we can perform different operations on these numbers quickly and easily.

For instance, contrast is defined as the difference in brightness between adjacent pixels. For there to be contrast, there must be a difference to start with, so one pixel will be lighter and one pixel will be darker. We can very easily increase the contrast by simply adding a number to the brightness value of the lighter pixel, and subtracting a number from the brightness value of the darker pixel.
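Here is a minimal sketch of that contrast increase on two 8-bit pixel values. The helper name and the amount are hypothetical, and the results are clipped so they stay within 0 to 255.

```python
def increase_contrast(darker: int, lighter: int, amount: int) -> tuple[int, int]:
    """Push two 8-bit pixel values apart by `amount`, clipping to the 0-255 range."""
    new_darker = max(0, darker - amount)
    new_lighter = min(255, lighter + amount)
    return new_darker, new_lighter

before = (100, 140)
after = increase_contrast(*before, amount=20)
print(before, "difference", before[1] - before[0])
print(after, "difference", after[1] - after[0])
```

The difference between the two pixels grows from 40 to 80, which is exactly the increase in contrast described above.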

Color in an image is represented by the brightness values of a pixel in each of the three color channels, red, green and blue, that make up the color information. We can just as easily change the color of a pixel, or a group of pixels, by changing those numbers.

We can perform other tricks too, such as increasing the apparent sharpness of an image by increasing the contrast of the edge boundaries of objects in an image with a process called unsharp masking.
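Unsharp masking adds back a scaled copy of the difference between the image and a blurred version of itself. This one-dimensional sketch uses a 3-pixel box blur as a simplifying assumption. Image editors typically use a two-dimensional Gaussian blur with radius and threshold controls, so treat the helpers here as hypothetical illustrations of the idea.

```python
def box_blur(row: list[float]) -> list[float]:
    """3-pixel moving average; edge pixels reuse their own value as the missing neighbour."""
    padded = [row[0]] + row + [row[-1]]
    return [(padded[i] + padded[i + 1] + padded[i + 2]) / 3 for i in range(len(row))]

def unsharp_mask(row: list[float], amount: float = 1.0) -> list[float]:
    """Sharpen by adding back `amount` times the difference between the row and its blur,
    clipping the result to the 0-255 range."""
    blurred = box_blur(row)
    return [min(255.0, max(0.0, p + amount * (p - b))) for p, b in zip(row, blurred)]

edge = [50.0, 50.0, 50.0, 200.0, 200.0, 200.0]  # a step edge between dark and light
print(unsharp_mask(edge))
```

The pixels on either side of the edge are pushed apart (50 becomes 0, 200 becomes 250), so the edge appears sharper while flat areas are unchanged.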

Having the image represented by numbers allows us to have a lot of control over it. And, because the image is a set of numbers, it can be exactly duplicated any number of times without any loss of quality.

Linear vs Non-Linear Data

The recording response of a digital sensor is proportional to the number of photons that hit it; in other words, the response is linear. Unlike film, a digital sensor records twice the signal when twice as many photons hit it. Digital sensors also do not suffer from reciprocity failure, as most films do.

The data produced by the CMOS sensor in a DSLR camera that is written to the raw file is linear. The linear data will usually look very dark and low in contrast compared to a normal photograph (see the image below).

Human visual perception of brightness is more similar to a logarithmic curve than a linear curve. Other human senses, such as hearing, and even taste, are also logarithmic. This means that we are better at sensing differences at the low end of the perceptual scale than at the high end. For example, we can very easily tell the difference between a one-pound weight and a two-pound weight when we pick them up. But we have great difficulty telling the difference between a 100-pound weight and a 101-pound weight. Yet the difference is the same: one pound.
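The weight example can be made concrete with a simple logarithmic model of perception, the Weber-Fechner law. That model is named here as an assumption and is not a precise description of human senses. It says perceived difference depends on the ratio of the two stimuli, not on their absolute difference.

```python
import math

def perceived_step(a: float, b: float) -> float:
    """Perceived difference under a simple logarithmic model: |log of the ratio|."""
    return abs(math.log(b / a))

print(perceived_step(1, 2))      # 1 lb vs 2 lb: ratio 2
print(perceived_step(100, 101))  # 100 lb vs 101 lb: ratio 1.01
```

Both pairs differ by one pound, but under this model the first step feels roughly seventy times larger.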

Normal photographs produced on film are also recorded in a non-linear manner that is similar to the way human vision works. That's why we can hold up a slide to the light and it looks like a reasonable representation of the original scene without any further modifications.

Because the human visual system does not work in a linear manner, a non-linear curve must be applied to "stretch" the linear data from a DSLR camera so that the tonality of the photo matches the way our visual system works. If the image is written to a JPEG file, these non-linear adjustments are done by software inside the camera. If a raw file is saved in the camera, they are done in software later, when the data is opened in an image processing program.
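A simple power-law (gamma) curve is one way to sketch such a stretch on linear data normalized to 0.0 to 1.0. The gamma of 2.2 is an illustrative assumption; the actual curves used by cameras and raw converters differ and are usually more complex.

```python
def stretch(linear: float, gamma: float = 2.2) -> float:
    """Apply a simple power-law curve to a normalized linear value (0.0 to 1.0).

    gamma=2.2 is an illustrative choice; camera and raw-converter curves differ.
    """
    return linear ** (1.0 / gamma)

for value in (0.01, 0.05, 0.18, 0.5, 1.0):
    print(f"linear {value:.2f} -> stretched {stretch(value):.2f}")
```

Dark linear values are lifted the most (0.18 becomes roughly 0.46), which is why unstretched linear data looks so dark.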

The image examples compare the same data for a normal daytime image shown in linear and in non-linear, stretched form.

In the image examples seen above, a screenshot of Photoshop's Curves dialog is included so we can compare the linear data with the same data after a non-linear curve has been applied. The curve in the dark image is linear: it is a straight line. The curve in the bright image shows the stretch that needs to be applied to the data to bring it closer to our visual perception.

The curve represents the input and output brightness values of the pixels in the image. Black is at the lower left corner, and white at the upper right corner. Gray tones are in between. When the line is straight, the input tone, which runs horizontally along the bottom, matches the output tone, which runs vertically along the left side.

In the curve inset, when the straight line is pulled upward so that its slope increases, the contrast of that portion of the curve, and of the corresponding tones in the image, increases. In the example image seen above, the tone at the indicated point is made much lighter. All of the tones below this point on the curve, and the corresponding tones in the image, are stretched apart and their contrast is increased.
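A tone curve can be sketched as a mapping from input to output values through a few control points. This version uses straight segments between the points, which is a simplification (Photoshop's Curves dialog draws a smooth curve), and the control points are hypothetical. The slope of each segment shows where contrast is increased (slope above 1) and where it is compressed (slope below 1).

```python
def curve(x: float, points: list[tuple[float, float]]) -> float:
    """Piecewise-linear tone curve through (input, output) control points, both 0-255."""
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 <= x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    raise ValueError("input outside the curve's range")

# Straight line from black (0, 0) to white (255, 255), with one point pulled up.
points = [(0, 0), (64, 160), (255, 255)]

shadow_slope = (160 - 0) / (64 - 0)         # above 1: shadow tones pulled apart
highlight_slope = (255 - 160) / (255 - 64)  # below 1: highlight tones squeezed together
print(curve(32, points), shadow_slope, highlight_slope)
```

An input of 32 comes out at 80, and tones below the pulled-up point are spread 2.5 times further apart, while the highlights above it are compressed.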

This is why it is important to work at a high bit depth when processing raw images. The strong stretches and contrast increases that are necessary pull tones apart. If we have many tones, which a high bit depth allows, they will redistribute smoothly. If we do not have many tones to work with, we risk posterization and banding when we stretch the data.
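The effect of bit depth on a strong stretch can be counted directly. This sketch is a hypothetical experiment, not a real processing pipeline: it takes only the darkest 1/16th of the input range, stretches it linearly to fill 0 to 255, and counts how many distinct output levels survive.

```python
def stretch_levels(bits: int, shadow_fraction: float = 1 / 16) -> int:
    """Count distinct 8-bit output levels after stretching the darkest tones to full range.

    Every input level in the bottom `shadow_fraction` of the range is linearly
    expanded to 0-255; the rest of the range is ignored.
    """
    max_in = 2 ** bits - 1
    cutoff = int(max_in * shadow_fraction)
    outputs = {round(v / cutoff * 255) for v in range(cutoff + 1)}
    return len(outputs)

print("8-bit data: ", stretch_levels(8), "distinct output levels")
print("16-bit data:", stretch_levels(16), "distinct output levels")
```

The 8-bit data ends up with only 16 levels spaced about 17 steps apart, which shows up as banding, while the 16-bit data still fills every one of the 256 output levels.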

In the brightened image, the slope of the top portion of the curve decreases in the highlight areas of the image. This compresses tones and decreases the contrast in those tones in the image.

It is the fact that we can access this data in linear form, at a high bit depth, that makes images from DSLR and CCD cameras so powerful for recording astrophotos. It allows us to subtract the sky background and light pollution. It gives us the ability to control the non-linear stretch adjustments to the data. These adjustments will bring out the details in the astronomical object that are hidden deep down in what we would consider to be the shadow areas of a normal photograph.
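In linear data, a uniform sky glow simply adds a roughly constant offset to every pixel, so it can be subtracted before any stretch is applied. This sketch estimates that offset as the median of a hypothetical row of pixel values. Using the median is an assumption that works only when the object covers less than half of the pixels; real tools often model gradients across the frame instead.

```python
import statistics

def subtract_background(pixels: list[float]) -> list[float]:
    """Estimate the sky background as the median pixel value and subtract it, clipping at 0.

    Intended for linear data, where sky glow adds a roughly constant offset.
    """
    background = statistics.median(pixels)
    return [max(0.0, p - background) for p in pixels]

# Hypothetical linear row: sky glow of about 500 ADU with a faint object on top.
row = [500.0, 502.0, 498.0, 530.0, 560.0, 531.0, 499.0, 501.0, 500.0]
print(subtract_background(row))
```

After subtraction, the sky is close to zero and the faint object's signal stands alone, ready to be stretched.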
